#2: Provably Safe *Against* AI?

Accountability for cybersecurity failures is nearly

nonexistent.—David Chapman (@Meaningness)

But also

The cost of cybercrime is projected to hit an annual $10.5 trillion by 2025, according to Cybersecurity Ventures. By the same token, Gartner analysts predict that over the next two years, 45% of global organizations will be impacted in some way by a supply chain attack. [Forbes]

Ten trillion by 2025 sounds like an awful big number. And it’s supposed to be 8 trillion for this year, almost 8% of world GDP. So either consumers are eating all the costs or there’s at least some accountability for companies, if not as legal liability, at least as lost profits.

With AI “democratizing” hacking skills, the difference two years from now could be a lot more than a mere 2 extra trillion. There’s AI on all sides of the problem, but offense tends to be asymmetrically easier.

A friend who works at a big tech company says there won’t need to be regulation mandating adoption of formal methods. At least not by corporations because with the proliferation of AI-enhanced hacking, the ones who don’t will simply go out of business. Too optimistic?

Cyber Insurance

I did some digging around "cyber insurance" premiums, claims, and payouts, to get a proxy baseline to look back next year and see if things really did get that bad that fast. I wanted to turn that into a bunch of neat prediction markets with crisp resolution criteria, but I didn't. [It could be your chance!]

Even without that much help from AI, things have already been getting tense, the number and damage of cyber incidents has been growing, and insurers have been raising premiums to keep up.

And to spend less on payouts, they're also putting pressure on companies to be more careful. Unless you can show them that you're taking some base amount of precaution, you just won't qualify for insurance, and that bar will keep rising.

That's "market legislation" happening in a way that on first look seems like it could be helpful, and even be able to adapt faster than regulation. @CISAgov doesn't seem as confident that the market will just sort itself out though, and is partnering with Stanford's Empirical Security Research Group to do some data analysis on cybersecurity insurance and evaluate the effectiveness of different approaches.

I imagine that in a best-case scenario they might use the output of that analysis and do some mechanism design to speed up internalization of costs and protect infrastructure and the wider economy against "catastrophic cyber risk".

I'm skeptical. Improve their cybersecurity practices somewhat? Probably. "Update all the way" and go full formal methods? Unlikely.

Secure By Design

Following Biden's Executive Order on AI, CISA also released its own Roadmap for Artificial Intelligence, focused on securing critical infrastructure, protecting frontier models from adversaries, protecting citizens and infrastructure from frontier models, and use AI as much as possible (responsibly!) in the government.

I'll spare you the details, but through hours of traversing an infinitude of links and PDFs on a number of government agencies and a bunch of different initiatives within each, I found ~zero mention of "formal verification" and "formal methods" that I felt could be meaningfully attributed to any of the recent programs related to AI and the Executive Order, including from CISA, NIST, and others.

Even strings like "Software Must Be Secure by Design, and Artificial Intelligence Is No Exception" which got me all excited at first, turned out to be a lot more reactive incident-response type stuff than the Correctness-by-Construction I was hoping it to be sort of equivalent to.

It wasn't an exhaustive search, but I came out of it a lot less enthusiastic about the plausibility of regulators and standards bodies going "let's apply these techniques from aerospace across all the critical infrastructure things!"

Airworthiness Certification

Not that aviation is the shining role model I had in mind either.

First look I had into it and apparently some guy managed to hack into flight controls mid-flight and (allegedly) make the plane climb to a higher altitude, just for the lulz. (And, presumably, to have authorities take it seriously and do something about airplanes not having avionics airgapped from entertainment systems.)

Also, hard to know what the precise current state of affairs is on paper and in practice given the sprawling number of diffs published as natural language PDFs making changes to pre-existing natural language PDFs and legal injunctions and revocations and deadline extensions etc. I really thought airgapping was a no-brainer; I'm still shocked it has definitely not been the case at least at some point in recent history; and it's not clear if that has changed.

I haven't checked the primary sources yet (sci-hub didn't help and 300+ USD for each of dozens of PDFs is still not something I'm ready to fork over for this), but while there are lots of detailed security practices and a whole standards document specifically about formal methods, it doesn't seem to be mandatory:

Are the activities described in the DO-178C/ED12C document set mandatory?

The activities discussed in the documents were never intended as the sole means of satisfying the objectives; instead, the activities are simply one means that may be suitable to meet those objectives. It is the responsibility of the applicant to plan and conduct the proposed activities that will be used to satisfy the objectives and, if necessary, to obtain agreement of those activities with the certification authority

— https://eurocae.net/media/1740/ftp1028_3.pdfDO-178C removes explicit reference to the use of formal methods as an alternative method to satisfy DO-178C objectives, but instead cites the use of assurance cases to provide adequate confidence and evidence that a product or process satisfies its requirements. DO-178C defines an assurance case as a technique in which arguments are explicitly given to link the evidence to the claims of compliance with the system safety objectives.

— https://ntrs.nasa.gov/api/citations/20120016835/downloads/20120016835.pdf

Nor very highly regarded by industry practitioners:

In theory, Formal Methods can undoubtedly assist the development and verification of complex programs. At the same time, there are practical limits to the application of Formal Methods. Combine these facts and most practitioners agree that Formal Methods are the least understood and most subjective of the new technologies associated with aviation-related software regulations.

— https://afuzion.com/do-333-introduction-formal-methods/

My naive thinking coming into this was "I haven't observed any global coordinated 9/11s from autopilot botnets of hijacked commercial airplanes yet, and I've heard of formal verification in aerospace, so they might be doing something right that could be leveraged as a strong precedent that wouldn't take much to extend to more industries like securing access to wetlabs for biosynthesis, and it would also drive demand for research, tooling, and training to scale up the applicability of formal methods."

Maybe, I prayed, the increased level of competence could lighten the herculean effort needed to make something like Davidad’s Open Agency Architecture work?

Not nearly as low-hanging as I wished.

Offense-Defense, Human-AI Permutations

Humans attacking with AI

Will a major cyberattack, virus, worm, etc. that uses LLMs

in some important way occur before January 1st, 2025? is at 70% on MetaculusFrom Palisade Research:

We’ve demonstrated that GPT-4 can leverage known vulnerabilities to achieve remote code execution on unpatched Windows 7 machines.

We plan to explore how AI systems could conduct reconnaissance, compromise target systems, and use information from compromised systems to pivot laterally through corporate networks or carry out social engineering attacks.

Also, arms race of drone swarms taking autonomous kill

decisions is heating up. Not sure this still qualifies as “humans attacking with” though.

Humans defending with AI

Establish an advanced cybersecurity program to develop AI tools to find and fix vulnerabilities in critical software, building on the Biden-Harris Administration’s ongoing AI Cyber Challenge. Together, these efforts will harness AI’s potentially game-changing cyber capabilities to make software and networks more secure.

— Zvi Mowshowitz’s summary of the Biden Executive Order on AI

Humans defending AI

Humans attacking AI

AI attacking humans

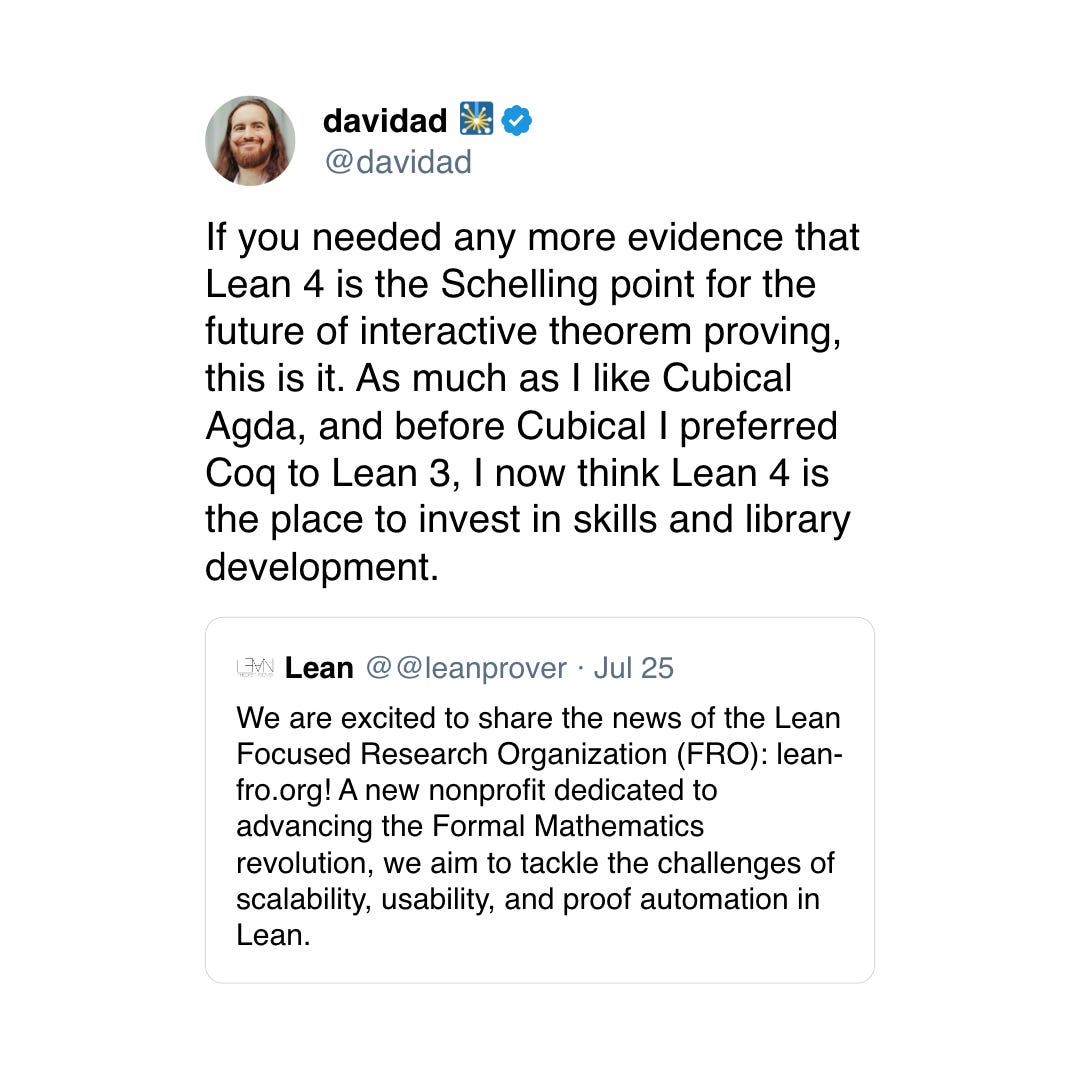

Picking a Theorem Prover

“Social proof” works for me!

Get Involved

Public Call for Interest in Mathematical Alignment

everything is okay

Beautiful short story I found the other day from tammy.

i wonder if someone out there cares about being uploaded. i wonder if their preferences have been satisfied, and if so how. what i am pretty confident about, is that whatever the situation, somehow, they are okay.

— https://carado.moe/everything-is-okay.html